This is the second part of my series on the architecture of SharePoint 2010 Search.

SharePoint 2010 Search Component Scaling

As I mentioned, the SSP was one of the biggest constraints on SharePoint 2007 Search’s ability to scale. Now that it is gone, we have a ton more flexibility.

Let’s talk about the components I introduced earlier and how each is commonly scaled.

Crawler – There can be multiple instances of the crawler component within a single search application service. This will allow you to more efficiently crawl content based on the scenario you need to support.

One example would be adding a second crawl component (on a different server) to improve crawl performance. As I mentioned, the crawl component is stateless, and data about what has or has not been crawled is stored in the crawl database. So you can create multiple crawl components that are configured to use the same crawl database, which will help with the performance of building the index (i.e. multiple crawlers running in parallel).

Another example would be dedicating higher-end machines with crawl components to particular content sources. For instance, let’s say there is content to be indexed on SharePoint and in File Shares, and there is significantly more data on the File Shares. You have the ability to create new crawl components that have a dedicated crawl database. Using host distribution rules, you can configure a new crawl component and crawl database to only crawl content in the File Shares while others crawl SharePoint content.
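To make the idea of host distribution rules concrete, here is a minimal sketch in Python of the mapping they express: a content host is pinned to a particular crawl database, and everything else falls back to a default. This is only an illustration, not how SharePoint implements the rules. The host name `fileserver01`, the database names and the function name are all made up for the example.

```python
from urllib.parse import urlparse

# Hypothetical rule table: content host -> crawl database that tracks it.
HOST_RULES = {
    "fileserver01": "CrawlDB_FileShares",
}

# Hypothetical default crawl database for hosts without a rule.
DEFAULT_CRAWL_DB = "CrawlDB_SharePoint"


def crawl_database_for(url: str) -> str:
    """Return the crawl database that should track the given content URL."""
    host = (urlparse(url).hostname or "").lower()
    return HOST_RULES.get(host, DEFAULT_CRAWL_DB)


print(crawl_database_for("file://fileserver01/share/report.docx"))
print(crawl_database_for("http://intranet/sites/hr"))
```

The first call resolves to the dedicated File Share database and the second falls back to the default, which mirrors the split described above.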

Some other guidelines you should be aware of are:

- There should be at least 4 cores dedicated for each crawl component running on a server.

- It is not recommended to have more than 16 crawler components in a single Search Service Application.

Crawl Database – Multiple instances of the crawl database can be created to support different scaling scenarios as just mentioned. It is not recommended to have more than 25 million items in a single crawl database, or more than 10 crawl databases per Search Service Application.
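The crawl guidelines above are easy to turn into a quick sanity check. The sketch below uses only the numbers quoted in this post (4 cores per crawl component, 16 crawl components, 25 million items per crawl database, 10 crawl databases). The function and parameter names are my own, and it is a planning aid rather than anything SharePoint provides.

```python
from typing import List

# Guideline values quoted in this post.
CORES_PER_CRAWL_COMPONENT = 4
MAX_CRAWL_COMPONENTS = 16
MAX_ITEMS_PER_CRAWL_DB = 25_000_000
MAX_CRAWL_DBS = 10


def crawl_databases_needed(total_items: int) -> int:
    """Smallest number of crawl databases that keeps each at or under 25 million items."""
    # Integer ceiling division avoids any floating point rounding.
    needed = (total_items + MAX_ITEMS_PER_CRAWL_DB - 1) // MAX_ITEMS_PER_CRAWL_DB
    return max(1, needed)


def crawl_warnings(total_items: int, crawl_components: int,
                   components_on_busiest_server: int,
                   cores_on_busiest_server: int) -> List[str]:
    """Return a list of guideline violations for a planned crawl topology."""
    warnings = []
    if crawl_components > MAX_CRAWL_COMPONENTS:
        warnings.append("more than 16 crawl components")
    if cores_on_busiest_server < components_on_busiest_server * CORES_PER_CRAWL_COMPONENT:
        warnings.append("fewer than 4 cores per crawl component")
    if crawl_databases_needed(total_items) > MAX_CRAWL_DBS:
        warnings.append("more than 10 crawl databases needed")
    return warnings


print(crawl_databases_needed(60_000_000))
print(crawl_warnings(30_000_000, 2, 1, 4))
```

An empty list means the plan stays inside the guidelines listed here. It says nothing about whether the hardware is actually fast enough.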

Query Component – In SharePoint 2007 we had the ability to run multiple instances of the query component on each load balanced WFE, so we had the ability to do some scaling. We now have more granular control over how we scale out multiple query components. We still have the ability to create multiple instances of a query component for redundancy purposes.

Index Partition – As I mentioned, the Index Partition was added to allow queries to perform more efficiently. Each query component has an associated partition. Whenever a new partition is created, a new query component must be created, as there is a one-to-one relationship between the query component and the index partition. There is an emphasis on keeping each index partition evenly balanced with documents (a hash algorithm based on the document id is used).
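To show why hashing on the document id keeps partitions balanced, here is a small sketch. I do not know which hash SharePoint actually uses, so MD5 is only a stand-in, and the function names are mine. The point is simply that a stable hash spreads ids evenly and always sends the same document to the same partition.

```python
import hashlib
from collections import Counter
from typing import Iterable


def partition_for(doc_id: int, partition_count: int) -> int:
    """Map a document id to a partition number using a stable hash (MD5 as a stand-in)."""
    digest = hashlib.md5(str(doc_id).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def partition_sizes(doc_ids: Iterable[int], partition_count: int) -> Counter:
    """Count how many documents land in each partition."""
    return Counter(partition_for(doc_id, partition_count) for doc_id in doc_ids)


# Spread 100,000 sample document ids over two partitions and show the sizes.
print(partition_sizes(range(100_000), 2))
```

With a reasonable hash the two counts come out close to each other, which is the balance the index partitions rely on.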

For example, let’s say you have 2 million documents in your single index and it takes 1 second to return the results. If this were split into two partitions with two query components, the time to complete the search would be cut roughly in half, because each of the two query components now only searches 1 million documents.

A couple of notes:

- It is not recommended to exceed 20 index partitions within a single Search Application Service instance, even though the hard boundary is 128 index partitions.

- There is a hard limit of 10 million items that can be in any index partition with SharePoint 2010 Search, and it is not recommended to exceed 10 partitions (100 million items). If you are exceeding that many items, you will probably look into separating search into multiple search service instances or look into using FAST.
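The arithmetic behind that example can be written down in a couple of lines. Note the big assumption: query time is treated as directly proportional to the number of documents in each partition, and the cost of merging results is ignored. Real numbers will not scale this cleanly.

```python
def estimated_query_seconds(single_index_seconds: float, partitions: int) -> float:
    """Estimate query time, ASSUMING it is proportional to documents per partition."""
    if partitions < 1:
        raise ValueError("at least one partition is required")
    return single_index_seconds / partitions


# The example from the text: 1 second on a single index, split across 2 partitions.
print(estimated_query_seconds(1.0, 2))
```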
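As a rough planning aid, the two notes above translate into a partition count like this. The limits are the ones quoted in this post, and the function names are hypothetical.

```python
# Limits quoted in this post.
MAX_ITEMS_PER_PARTITION = 10_000_000
RECOMMENDED_MAX_PARTITIONS = 10


def index_partitions_needed(total_items: int) -> int:
    """Smallest number of index partitions that keeps each at or under 10 million items."""
    if total_items < 0:
        raise ValueError("total_items cannot be negative")
    # Integer ceiling division avoids any floating point rounding.
    needed = (total_items + MAX_ITEMS_PER_PARTITION - 1) // MAX_ITEMS_PER_PARTITION
    return max(1, needed)


def within_recommendation(total_items: int) -> bool:
    """True if the item count fits in the recommended 10 partitions (100 million items)."""
    return index_partitions_needed(total_items) <= RECOMMENDED_MAX_PARTITIONS


print(index_partitions_needed(15_000_000))
print(within_recommendation(120_000_000))
```

When `within_recommendation` comes back false, that is the point where multiple search service instances or FAST enter the conversation.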

Index Partition Mirror – Earlier I introduced the concept of index mirrors as a way to provide redundancy and better performance. The mirror is what it sounds like: an identical copy of the index. When mirrors are used, you basically now have a one-to-many relationship between the query component and the index partitions, but there will only be one primary partition. The mirror partitions can be used as a way to provide redundancy, so if the machine that is hosting the primary partition were to fail, the mirror will become primary. Also, the mirror can be configured so it can be searched against as well, to help support scenarios where there is a heavy load of queries being made.

Property Database – There can be more than one property database created in the farm, again to support scaling and query performance. It is recommended that once 25 million items have been indexed, a new property database should be introduced into the solution architecture. This is because with that many items, a significant amount of metadata will be created, and the property database is responsible for managing that metadata for search queries. This is not a hard limit, just a recommendation.

It is also recommended to place the Property and Crawl databases on separate storage to remove the I/O contention that can occur when crawls and queries are executing at the same time. This is because the property database is highly utilized as part of the querying process. If full crawls are done during off hours and incremental crawls are not very intensive, this should not be an issue.
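The two roles a mirror can play (failover only, or also serving queries) can be pictured with a small routing sketch. This is a toy model of the behavior described above, not SharePoint code. The `QueryComponent` class, the `passive` flag and the round-robin choice are all assumptions made for the illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QueryComponent:
    name: str
    healthy: bool = True
    passive: bool = False  # hypothetical flag: True means "failover only"


def pick_component(components: List[QueryComponent], request_number: int) -> QueryComponent:
    """Choose which query component for one index partition answers a request."""
    healthy = [c for c in components if c.healthy]
    if not healthy:
        raise RuntimeError("no healthy query component for this partition")
    # Prefer components that actively serve queries; fall back to passive mirrors.
    active = [c for c in healthy if not c.passive]
    pool = active or healthy
    # Simple round robin over whichever pool is in use.
    return pool[request_number % len(pool)]


primary = QueryComponent("primary")
mirror = QueryComponent("mirror", passive=True)
print(pick_component([primary, mirror], 0).name)

primary.healthy = False  # simulate the primary's server failing
print(pick_component([primary, mirror], 1).name)
```

Creating the mirror with `passive=False` instead makes requests alternate between the two, which corresponds to letting the mirror take queries under heavy load.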

Search Admin Component – There is no capability to scale this; not needed either.

Search Admin Database – Since I mentioned this earlier, I will mention it to be consistent. There is no capability to scale the search admin databases since there can only be one per Search Application Service instance. You can create redundancy at the SQL Server level.

SharePoint 2010 Search Scaling Scenarios

Up to this point you have been consuming a lot of information about what the new SharePoint 2010 Search components are and how they can scale. It is a lot to take in. What I personally like to do is start with the most basic scenario and then scale out from there.

I am going to base each scenario on the number of items to be searched.

To save myself time, I am going to re-use several of the diagrams that are provided to us here - http://technet.microsoft.com/en-us/library/cc263199.aspx. Specifically, I am using diagrams from “Search Architectures for Microsoft SharePoint Server 2010” and “Design Search Architectures for Microsoft SharePoint Server 2010”. I highly recommend reading both of these in detail once you have finished reading my blog as reinforcement.

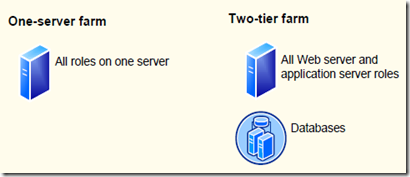

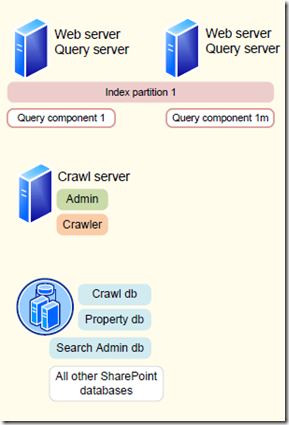

Scenario 1 – 0 to 1 Million Items

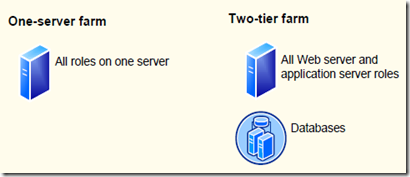

This is probably one of the most basic scenarios but according to Microsoft either of the farms can support this many documents.

HOWEVER, I would never recommend these two environments for production where Service Level Agreements (SLAs) might be in place, because they are not redundant. Out of the gate, I would expect to minimally have something like below. It is a best practice from a SharePoint architecture perspective to have your Web Front Ends (WFEs) and Application Servers on different machines. As well, you typically have the search service dedicated to its own Application Server so as not to create contention with other services that may be running in the farm.

I think the point at the end of the day is that a single application server that is running all of the Search components we described earlier can support up to 1 million items. So if you are running a small SharePoint production site, where SLAs are not stringent, you should be fine using search on one application server for up to 1 million items.

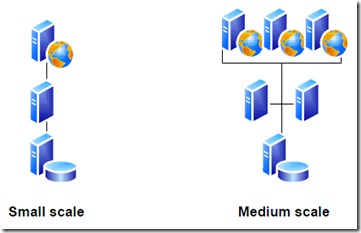

Scenario 2 – 1 to 10 Million Items

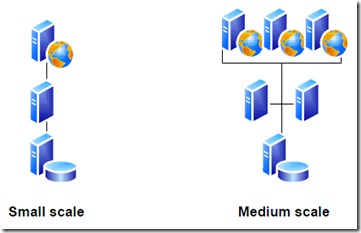

In this scenario we now have a few million items and are at the point where scale must be introduced. This is referred to as a Small Search Farm.

Observations:

- There is a single crawl server with a single crawl component that builds a single index of all content.

- There is a single database server used where the crawl, property and admin databases are hosted.

- There are two query servers and they have been configured to run on each web front end (WFE). This means the WFE needs to have enough space to store the index.

- There is only one index partition created by the crawler where all of the indexed items can be found.

- There is one primary query component and there is one mirror query component. All the queries will go against the primary query component and will fail over to the second.

- One question you may have is whether having only one active query component will become a performance bottleneck. The answer is it could, if you have a website with a lot of users performing concurrent queries. Remember you can configure the query component mirror to accept queries.

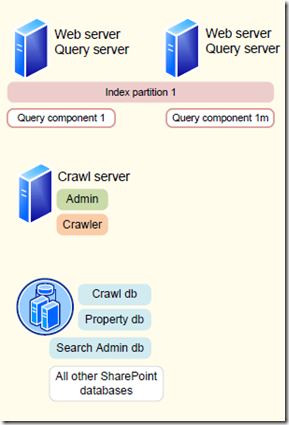

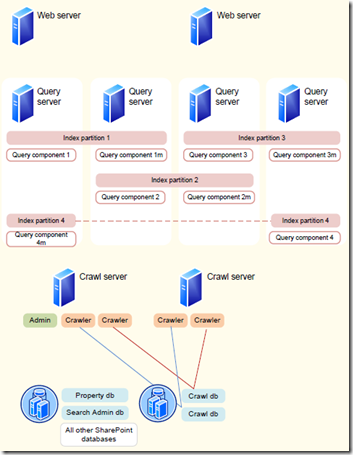

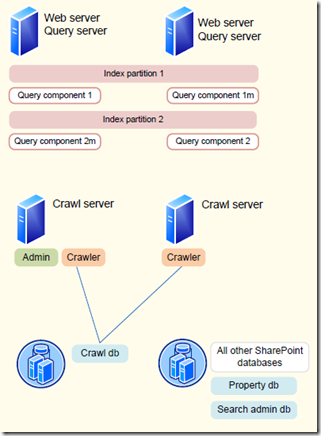

Scenario 3 – 10 to 20 Million Items

Next we have a scenario where we have roughly 10 to 20 million items to be indexed and we want to start scaling the architecture. This is the one that many organizations will start with because it is scaled for redundancy and performance. So even though you may not have that much content right off the bat, you will be able to grow into it.

Observations:

- There are now two crawler components that reside on different machines. The two crawler components work with a single crawl database in parallel to build up the index. This also adds a level of redundancy if one of the crawl servers were to go down.

- There are now two partitions of the index, mostly based on the 10 million item limit for each index partition. The need for two index partitions requires that two query components be created. The primary for each query component is installed on a different Query Server, with the query mirror installed on the other machine. The net effect of this is that query time will be reduced because the query component does not have to search the entire index; it only has to search half the index.

- The Crawl and Property databases have been split apart onto different servers. This is because contention can be created between them when crawling and querying occurs concurrently. I really think this is probably one of the last things you need to do to improve performance if you have a highly available SQL Server production environment. This will only help when there are database load issues, there are more than 25 million items or there is a significant amount of metadata that has been built up.

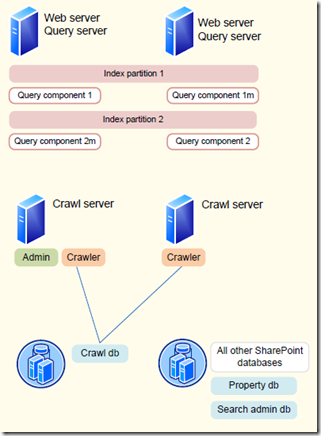

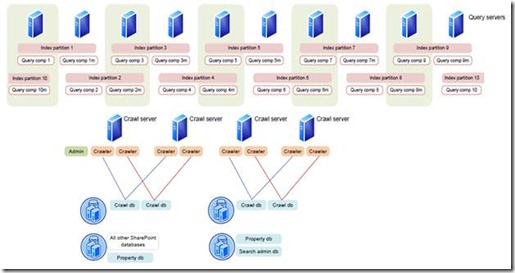

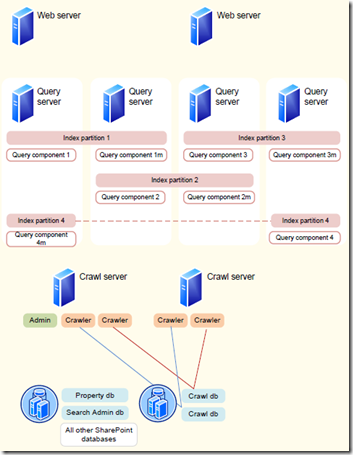

Scenario 4 – 20 to 40 Million Items

In this next diagram the amount of content is dialed up to 20 to 40 million items.

Observations:

- This is still actually referred to as a medium farm, but it is called a dedicated farm because the Query components are no longer hosted on the web front ends. The query components are now hosted on their own machines.

- Next you will notice there are now four index partitions for the corresponding four query components. As well, the mirror for each query partition is placed on a different query server.

- Next notice there are now two crawl databases and four crawler components. Two crawler components are dedicated to each crawl database. This configuration supports the ability to have different crawler components crawl different content sources. For instance, one set of crawl components may crawl SharePoint while the other set crawls MySites, File Shares and Public Exchange Folders. The point is that if you have scaled up to searching this amount of content, it is likely that you will be breaking up how content is indexed for performance reasons.

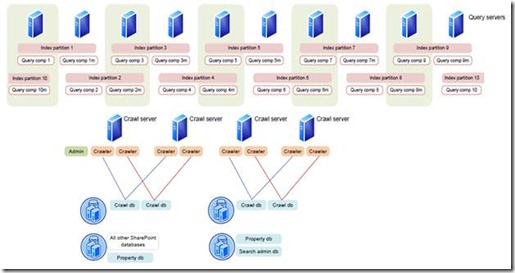

Scenario 5 – 40 to 100 Million Items

Last is a fully scaled out SharePoint Search Application service instance.

Observations:

- This is a fully scaled out farm based on the recommended capacity for SharePoint 2010 search, being 100 million items. As you can see there are now ten index partitions.

- There are several crawl components and crawl servers.

- Crawl databases have been broken into multiple database servers.

- Multiple property databases on different database servers.

Personally I am not sure how commonly you will see something like this. Do I believe more than 100 million items will need to be indexed by SharePoint? Yes – I do believe that will happen in large environments. However you may employ a strategy where you have:

- Multiple Search Application Service instances in the farm with less data being indexed by each.

- There may be a central hosted search farm while smaller farms have their own search configuration.

- And when you need to provide a single user search experience where more than 100 million items need to be searched, FAST can be utilized. I want to point out that even if you have fewer than 100 million items to be indexed, FAST should still be considered. This is because there are a ton of search and usability features available in FAST which are not available in the out of the box SharePoint search.
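The item-count ranges used for the five scenarios above can be summarized as a simple lookup. This is just my own shorthand for the ranges in this post, with the boundaries treated as inclusive upper limits. The labels and the function name are not official terminology.

```python
import bisect

# Upper bound (inclusive) of each scenario's item range, as used in this post.
UPPER_BOUNDS = [1_000_000, 10_000_000, 20_000_000, 40_000_000, 100_000_000]

SCENARIOS = [
    "Scenario 1: single application server running all search components",
    "Scenario 2: small search farm, one index partition with a mirror",
    "Scenario 3: two crawl components, two index partitions",
    "Scenario 4: dedicated query servers, four index partitions, two crawl databases",
    "Scenario 5: fully scaled out, ten index partitions",
]

BEYOND = "Beyond 100 million items: multiple search service applications or FAST"


def scenario_for(item_count: int) -> str:
    """Return the scenario from this post whose item range contains item_count."""
    if item_count < 0:
        raise ValueError("item_count cannot be negative")
    index = bisect.bisect_left(UPPER_BOUNDS, item_count)
    if index == len(UPPER_BOUNDS):
        return BEYOND
    return SCENARIOS[index]


print(scenario_for(15_000_000))
```

Item count is only the starting point. The business requirements and SLAs discussed in the closing can easily push you to a larger scenario than this lookup suggests.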

Closing

To this point I discussed only how to scale based on the number of items to be indexed. However that is not really an accurate way to build a SharePoint 2010 Search architecture. In many cases business rules or other environment factors can drive how search is architected. Here are some examples:

- Connectivity to specific content locations is slower in some cases. What you may do is add dedicated crawler components and databases just for indexing this specific content.

- Content that is indexed must be fresh and full indexes are needed on a regular basis. In this scenario you will again add more crawl components.

- There is a significant number of users who will query. In this case, you may only have 5 million items but you may have two or even three query components dedicated to their own machines.

Hearing these examples, you should take the information above and start scaling out based on the business requirements and SLAs you need to support.

My personal recommendation is probably to start with scenario 3 and make any tweaks to the configuration based on what you need for a production environment that needs to support growth and redundancy. However, adding new components later is no big deal either.

Now that you have finished reading this – I highly recommend you read both of these:

![clip_image002[1] clip_image002[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgzQ_9B7-lCpbKXXy8M8K5hpET2aWgIRX9X4Wg5HqQlv_1ooTinGnzuPj_SH3frxWZKEJPI2G92fxsNJ1K7K_3WbC8bhjHzc2lYm-_MeKmlzYvweyb6PcXAkvhliuSI4aI1qhSkaTp6f0aE/?imgmax=800)